Staying One Step Ahead: Unleashing the Potential of Compressed Optimized Neural Networks

Artificial intelligence (AI) is transforming a wide range of industries. Neural networks, a fundamental component of AI systems, have demonstrated great potential in research, medicine, finance, and beyond. However, as we harness their power to drive innovation, we must also remain vigilant about the security vulnerabilities they introduce. As adversaries seek to exploit weaknesses in neural networks, it becomes crucial to understand and counter adversarial attacks effectively.

The Dangers of Adversarial Attacks

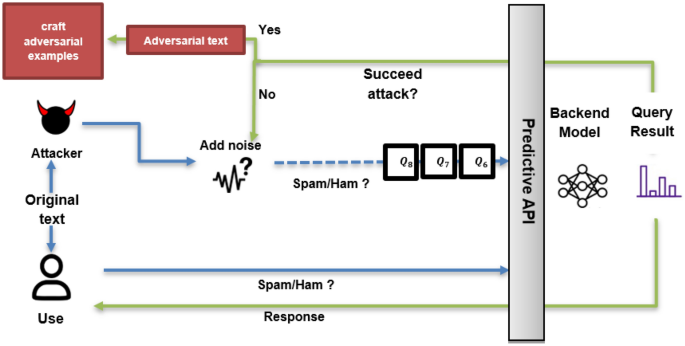

Adversarial attacks are malicious attempts to deceive or manipulate a neural network’s behavior. By introducing carefully crafted inputs or alterations to the data, attackers can mislead the neural network’s decision-making process, leading to potentially severe consequences. Consider the example of an autonomous vehicle relying on image recognition to identify pedestrians, signs, and obstacles. In an adversarial attack scenario, an attacker could subtly alter an image or introduce noise that results in misclassifications, causing the vehicle to make harmful decisions.
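To make the idea of a "carefully crafted input" concrete, here is a minimal sketch of one well-known attack, the fast gradient sign method (FGSM), applied to a tiny logistic classifier. The article does not name a specific attack, so the choice of FGSM, the toy weights, and the input values are all assumptions made for illustration.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(weights, bias, x):
    """Probability that x belongs to class 1 under a logistic model."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)

def sign(value):
    return (value > 0) - (value < 0)

def fgsm_perturb(weights, bias, x, label, epsilon):
    """Shift every feature by epsilon in the direction that increases the loss.

    For logistic regression with cross-entropy loss, the gradient of the
    loss with respect to the input is (p - label) * weights.
    """
    p = predict(weights, bias, x)
    grad = [(p - label) * w for w in weights]
    return [xi + epsilon * sign(g) for xi, g in zip(x, grad)]

# Hypothetical toy model and input, chosen only for illustration.
weights = [2.0, -1.0, 0.5]
bias = 0.0
x = [0.3, 0.1, 0.2]  # assumed true label: 1

x_adv = fgsm_perturb(weights, bias, x, label=1, epsilon=0.2)
clean_score = predict(weights, bias, x)      # above 0.5: classified as 1
adv_score = predict(weights, bias, x_adv)    # below 0.5: classified as 0
```

No feature moves by more than 0.2, yet the predicted class flips. Attacks on image classifiers follow the same principle, with a perturbation budget small enough that a person would not notice the change.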

The impact of adversarial attacks extends far beyond autonomous vehicles. Banking systems, online security measures, and even healthcare applications that heavily rely on neural networks might find themselves vulnerable to such attacks if proper precautions are not taken. Therefore, it becomes imperative to adopt proactive measures to mitigate the risks associated with adversarial attacks.

Unleashing the Power: Compressed Optimized Neural Networks

One approach gaining traction for mitigating adversarial attacks is the use of compressed optimized neural networks. These networks are designed to improve both performance and security while reducing the complexity and resource demands of the model.

A compression step, such as pruning or quantization, eliminates redundant and irrelevant parameters of the neural network, resulting in a smaller model that is faster to execute. Proponents argue that a simpler model also gives adversarial perturbations less to exploit, although the effect depends on the compression method and should be confirmed by testing the compressed model against known attacks.
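The article does not say which compression method it has in mind. As one common possibility, the sketch below shows magnitude pruning, which zeroes out the weights with the smallest absolute values. The function name, the `sparsity` parameter, and the example layer are hypothetical.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the smallest-magnitude fraction of a layer's weights.

    weights:  list of rows (a dense layer's weight matrix)
    sparsity: fraction of weights to remove, between 0 and 1
    Ties at the threshold are pruned too, so slightly more than the
    requested fraction can be removed.
    """
    magnitudes = sorted(abs(w) for row in weights for w in row)
    n_prune = int(len(magnitudes) * sparsity)
    if n_prune == 0:
        return [row[:] for row in weights]
    threshold = magnitudes[n_prune - 1]
    return [[0.0 if abs(w) <= threshold else w for w in row] for row in weights]

# Hypothetical 2x3 layer; half of the weights are removed.
layer = [[0.8, -0.05, 0.3],
         [0.01, -0.6, 0.02]]
pruned = magnitude_prune(layer, sparsity=0.5)
# pruned == [[0.8, 0.0, 0.3], [0.0, -0.6, 0.0]]
```

In practice a pruned model is usually fine-tuned afterwards to recover accuracy, and its robustness is measured rather than assumed.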

Additionally, compressed optimized neural networks benefit from efficient algorithms that improve training and inference times. These algorithms tune the model’s architecture, parameters, and kernel sizes, which can also make it more robust against adversarial manipulation. Combined, compression and optimization give neural networks an added layer of defense against adversarial attacks.

Implementing Effective Security Measures

While the adoption of compressed optimized neural networks is a proactive step, it is essential to couple this approach with a comprehensive security framework. Here are a few key strategies to implement:

1. Regular Monitoring and Testing

To stay ahead of potential adversarial attacks, ongoing monitoring and testing are critical. Continuously assess the network’s strengths and weaknesses, identify vulnerable areas, and take proactive measures to address them.

2. Robust Data Preprocessing

Adversarial attacks often rely on subtle modifications to the underlying data. To counter this, invest in robust data preprocessing techniques. Cleaning, normalizing, and validating inputs can significantly reduce the likelihood of successful attacks.
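One simple way to normalize inputs is to clip them to their valid range and round them to a small number of levels, so that very small perturbations are erased before the model sees them. The sketch below assumes pixel-like values in [0, 1]; the function name and the default of five levels are hypothetical choices, not something the article prescribes.

```python
def squeeze_input(values, low=0.0, high=1.0, levels=5):
    """Clip each value to [low, high], then round it to one of `levels` steps."""
    step = (high - low) / (levels - 1)
    squeezed = []
    for v in values:
        v = min(max(v, low), high)                        # reject out-of-range values
        squeezed.append(low + round((v - low) / step) * step)
    return squeezed

clean = [0.50, 0.25]
perturbed = [0.53, 0.22]   # hypothetical small adversarial shift

# Both inputs collapse to the same representation: [0.5, 0.25]
same = squeeze_input(clean) == squeeze_input(perturbed)
```

Coarser levels remove more of the perturbation but also discard more legitimate detail, so the setting has to be tuned against the model's accuracy on clean data.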

3. Adversarial Training

Incorporate adversarial training during the model’s development phase. By adding adversarial examples, labeled with their correct classes, to the training data, the model learns to classify manipulated inputs correctly.
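A minimal sketch of adversarial training, assuming a logistic classifier and FGSM-style perturbations (neither is specified in the article): for each training example, a perturbed copy is generated against the current weights and the model is updated on both the clean and the perturbed input with the same label. The dataset, learning rate, and epsilon are hypothetical.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-max(-60.0, min(60.0, z))))

def predict(w, b, x):
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def fgsm(w, b, x, y, eps):
    """Perturb x by eps along the sign of the loss gradient (p - y) * w."""
    err = predict(w, b, x) - y
    return [xi + eps * ((err * wi > 0) - (err * wi < 0)) for xi, wi in zip(x, w)]

def train(data, eps=0.0, epochs=200, lr=0.1):
    """Stochastic gradient descent; with eps > 0, also train on adversarial copies."""
    w, b = [0.0] * len(data[0][0]), 0.0
    for _ in range(epochs):
        for x, y in data:
            batch = [x]
            if eps > 0:
                batch.append(fgsm(w, b, x, y, eps))   # crafted against current weights
            for xs in batch:
                err = predict(w, b, xs) - y
                w = [wi - lr * err * xi for wi, xi in zip(w, xs)]
                b -= lr * err
    return w, b

def accuracy_under_attack(w, b, data, eps):
    """Fraction of examples still classified correctly after an FGSM perturbation."""
    correct = 0
    for x, y in data:
        x_adv = fgsm(w, b, x, y, eps)
        correct += (predict(w, b, x_adv) > 0.5) == (y == 1)
    return correct / len(data)

# Hypothetical two-class toy dataset.
data = [([1.0, 0.8], 1), ([0.9, 1.2], 1), ([1.1, 1.0], 1),
        ([-1.0, -0.9], 0), ([-0.8, -1.1], 0), ([-1.2, -1.0], 0)]

w_adv, b_adv = train(data, eps=0.3)
robust_accuracy = accuracy_under_attack(w_adv, b_adv, data, eps=0.3)
```

The `accuracy_under_attack` helper doubles as a simple regression test: rerunning it after every retraining or compression step shows whether robustness has degraded.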

4. Enforcing Input Validation

Implement strict input validation checks to detect potential adversarial inputs. Consider employing anomaly detection techniques that flag inputs significantly deviating from standard patterns, protecting the neural network from potential harm.
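As a sketch of the anomaly-detection idea, the validator below records per-feature statistics from trusted reference inputs and flags anything malformed or far outside that range. The class name, the z-score threshold of 3, and the reference data are assumptions made for illustration.

```python
import math
import statistics

class InputValidator:
    """Flags inputs that are malformed or far from the reference distribution."""

    def __init__(self, reference_inputs, max_z=3.0):
        columns = list(zip(*reference_inputs))
        self.means = [statistics.mean(col) for col in columns]
        # Floor the spread so constant features do not cause division by zero.
        self.stdevs = [max(statistics.pstdev(col), 1e-9) for col in columns]
        self.max_z = max_z

    def is_suspicious(self, x):
        if len(x) != len(self.means):
            return True                                   # wrong shape
        if not all(math.isfinite(xi) for xi in x):
            return True                                   # NaN or infinity
        return any(abs(xi - m) / s > self.max_z
                   for xi, m, s in zip(x, self.means, self.stdevs))

# Hypothetical trusted inputs collected during normal operation.
reference = [[0.10, 0.50], [0.20, 0.40], [0.15, 0.60], [0.12, 0.55]]
validator = InputValidator(reference)

typical = validator.is_suspicious([0.14, 0.50])    # False
outlier = validator.is_suspicious([5.00, 0.50])    # True
```

A statistical check like this catches gross outliers and malformed inputs. Perturbations small enough to stay within the normal range need complementary defenses such as adversarial training.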

5. Regular Model Updates

As new attack methodologies emerge, it’s crucial to keep neural network models up to date. Regularly update and enhance security measures to respond to evolving adversarial tactics.

Conclusion

In the era of artificial intelligence, staying ahead of adversarial attacks is paramount. By combining the resource efficiency of compressed optimized neural networks with a robust security framework, organizations can better protect their systems from malicious actors seeking to exploit vulnerabilities.

Source: insidertechno.com