Key Takeaways:

Machine learning is a complex and often misunderstood concept, but it plays a crucial role in various industries.

Explainability, interpretability, and observability are three important aspects of machine learning that help us understand, trust, and improve the performance of ML models.

By demystifying these concepts, we can unlock the full potential of machine learning and leverage its power in creating innovative solutions.

Demystifying Machine Learning: Unraveling Explainability, Interpretability, and Observability

The field of machine learning has grown rapidly over the past decade, revolutionizing industries such as healthcare, finance, and retail. Machine learning algorithms can uncover valuable insights from large datasets, automate tasks, and improve decision-making processes. However, despite these benefits, machine learning still poses challenges, such as ensuring that models are transparent and reliable.

In this article, we’ll delve into three key concepts that help address these challenges: explainability, interpretability, and observability. By understanding these concepts, we can demystify machine learning and harness its power effectively.

Explainability: Understanding the Black Box

One of the biggest concerns with machine learning is its “black box” nature. Traditional algorithms, such as linear regression or decision trees, provide clear insight into how they arrive at their predictions. However, many modern ML models, such as deep neural networks, are far more complex and difficult to interpret. Explainability is crucial for understanding the inner workings of these models and for addressing potential biases or ethical concerns.

The Importance of Explainable Artificial Intelligence

Explainable artificial intelligence (XAI) strives to bridge the gap between model complexity and human-interpretable output. By providing explanations for ML predictions, we can gain valuable insights into how the model arrived at a particular decision. This transparency increases trust, allows for bias detection, and makes errors easier to identify and correct.

Moreover, XAI is essential for accountability and compliance in highly regulated industries. For instance, financial institutions must be able to explain why a loan application was rejected or accepted, as per regulatory requirements. Explainability enables organizations to make fair and responsible decisions, avoiding black-box-driven actions.

Methods for Achieving Explainability

There are various techniques and methods to achieve explainability in machine learning models, including:

1. Rule-based approaches: These approaches generate interpretable “if-then” rules based on the model’s decision-making process. This method allows us to understand decisions within the context of human-understandable rules.

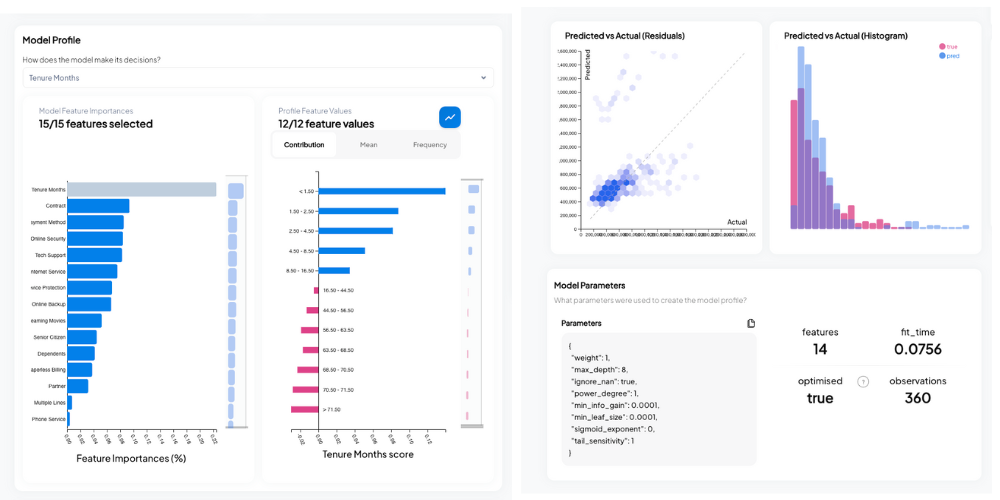

2. Feature importance analysis: By examining the relative importance of model features for predictions, we can obtain insights into what factors the model considers when making decisions.

3. Local interpretability: This method focuses on explaining individual predictions rather than the entire model. Techniques such as LIME (Local Interpretable Model-Agnostic Explanations) provide interpretable explanations for individual predictions, helping us understand the reasoning behind specific model outputs.
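The local-interpretability idea in item 3 can be sketched in a few lines. This is a simplified local surrogate in the spirit of LIME, not the LIME library itself: it perturbs one instance, weights the perturbed samples by proximity, and fits a weighted linear model whose coefficients serve as the explanation. The names `black_box`, `local_surrogate`, `scale`, and `kernel_width` are hypothetical and chosen only for illustration.

```python
import math
import random


def black_box(x):
    """Hypothetical opaque model: nonlinear in x[0], linear in x[1]."""
    return x[0] ** 2 + 3.0 * x[1]


def solve_linear_system(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        s = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - s) / m[i][i]
    return x


def local_surrogate(predict, instance, n_samples=500, scale=0.1,
                    kernel_width=0.25, seed=0):
    """Fit a proximity-weighted linear model around a single instance."""
    rng = random.Random(seed)
    d = len(instance)
    # Accumulate the weighted normal equations (Z^T W Z) beta = Z^T W y.
    ztz = [[0.0] * (d + 1) for _ in range(d + 1)]
    zty = [0.0] * (d + 1)
    for _ in range(n_samples):
        offsets = [rng.gauss(0.0, scale) for _ in range(d)]
        sample = [v + o for v, o in zip(instance, offsets)]
        dist_sq = sum(o * o for o in offsets)
        weight = math.exp(-dist_sq / kernel_width ** 2)  # closer samples count more
        z = [1.0] + offsets  # intercept term plus centered features
        y = predict(sample)
        for i in range(d + 1):
            zty[i] += weight * z[i] * y
            for j in range(d + 1):
                ztz[i][j] += weight * z[i] * z[j]
    beta = solve_linear_system(ztz, zty)
    return beta[0], beta[1:]  # local intercept, per-feature local effects


intercept, coefficients = local_surrogate(black_box, [1.0, 2.0])
print("local effects per feature:", coefficients)
```

Near the point (1.0, 2.0), the coefficients approximate how strongly each feature moves this one prediction, which is the kind of per-prediction explanation described above. Production tools add sampling strategies, feature selection, and support for text or images that this sketch omits.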

Overall, incorporating explainability in machine learning helps us trust the model’s predictions, identify and rectify biases, and improve the transparency of AI-powered systems.

Interpretability: Decrypting the Inner Workings

While explainability concentrates on gaining insight into specific predictions, interpretability aims to understand the behavior and inner workings of the entire machine learning model. Interpretability focuses on answering questions such as: What components contribute to a model’s decision? Which features are most relevant?

The Role of Feature Importance

Feature importance plays a pivotal role in interpretability. By quantifying the contribution of each input feature to a model’s predictions, we can identify which factors the model deems as significant. This information allows us to understand the problem better, detect spurious correlations, and refine the model accordingly.
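One common way to quantify feature contribution is permutation importance: shuffle one feature at a time and measure how much the model's error grows. The sketch below is a minimal, standard-library illustration under assumed conditions. The `model` function and the synthetic data are hypothetical stand-ins for a fitted model and a held-out dataset.

```python
import random


def model(row):
    """Hypothetical fitted model: leans on feature 0, barely on 1, ignores 2."""
    return 5.0 * row[0] + 0.5 * row[1]


def mean_squared_error(predict, rows, targets):
    return sum((predict(r) - t) ** 2 for r, t in zip(rows, targets)) / len(rows)


def permutation_importance(predict, rows, targets, n_repeats=5, seed=0):
    """Average increase in error after shuffling each feature column."""
    rng = random.Random(seed)
    baseline = mean_squared_error(predict, rows, targets)
    importances = []
    for col in range(len(rows[0])):
        increases = []
        for _ in range(n_repeats):
            shuffled = [r[col] for r in rows]
            rng.shuffle(shuffled)
            # Replace only this column, leaving every other feature intact.
            permuted = [r[:col] + [v] + r[col + 1:] for r, v in zip(rows, shuffled)]
            increases.append(mean_squared_error(predict, permuted, targets) - baseline)
        importances.append(sum(increases) / n_repeats)
    return importances


data_rng = random.Random(42)
rows = [[data_rng.uniform(-1, 1) for _ in range(3)] for _ in range(200)]
targets = [model(r) for r in rows]  # synthetic targets, for illustration only

importances = permutation_importance(model, rows, targets)
for index, value in enumerate(importances):
    print(f"feature {index}: error increase {value:.3f}")
```

A feature the model ignores shows no increase in error when shuffled, which is one way a spurious or irrelevant input can be spotted. Correlated features can distort these scores, so the results are best read as a rough ranking rather than an exact attribution.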

In addition to feature importance, dimensionality reduction techniques, such as Principal Component Analysis (PCA), can aid interpretability by capturing important patterns in high-dimensional data. By reducing data dimensions, we can visualize and comprehend the data more effectively.
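To make the PCA idea concrete, here is a small sketch that finds the first principal component of synthetic three-dimensional data using power iteration on the covariance matrix. It is an illustration under assumptions, not a full PCA implementation: the data is generated so that most of the variance lies along one direction, and the function names are hypothetical.

```python
import math
import random


def covariance_matrix(rows):
    """Return the sample covariance matrix and the mean-centered rows."""
    n, d = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(d)]
    centered = [[r[j] - means[j] for j in range(d)] for r in rows]
    cov = [[sum(c[i] * c[j] for c in centered) / (n - 1) for j in range(d)]
           for i in range(d)]
    return cov, centered


def first_principal_component(cov, n_iter=200):
    """Power iteration: repeatedly apply cov and renormalize."""
    d = len(cov)
    v = [1.0 / math.sqrt(d)] * d
    for _ in range(n_iter):
        w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    # Variance captured along v (the corresponding eigenvalue).
    eigenvalue = sum(v[i] * sum(cov[i][j] * v[j] for j in range(d)) for i in range(d))
    return v, eigenvalue


# Synthetic data: features 0 and 1 move together, feature 2 is small noise.
rng = random.Random(0)
rows = []
for _ in range(300):
    t = rng.gauss(0.0, 3.0)
    rows.append([t + rng.gauss(0.0, 0.1), t + rng.gauss(0.0, 0.1), rng.gauss(0.0, 0.1)])

cov, centered = covariance_matrix(rows)
component, eigenvalue = first_principal_component(cov)
explained_ratio = eigenvalue / sum(cov[i][i] for i in range(len(cov)))

# One-dimensional projection of each row onto the first component.
scores = [sum(c[j] * component[j] for j in range(len(component))) for c in centered]
print("first component:", component)
print("share of variance explained:", explained_ratio)
```

Here a single component captures nearly all of the variance, so the three-dimensional data can be plotted on one axis with little loss. Real datasets rarely compress this cleanly, and library implementations typically compute all components at once with a matrix decomposition.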

Interpretability Techniques for Complex Models

Complex machine learning models, such as deep learning architectures, pose challenges for interpretability due to their large number of layers and parameters. However, several techniques help us grasp their inner workings:

1. Activation maximization: This technique visualizes the features that maximize the activation of specific neurons. By observing which patterns excite neurons, we can infer their role in the decision-making process.

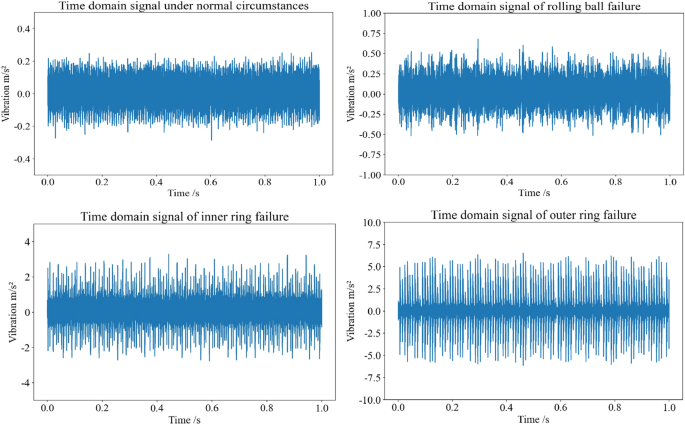

2. Sensitivity analysis: Sensitivity analysis involves determining how small changes in input features affect model predictions. This method helps us identify critical areas of input data that have the most significant impact on outputs.

3. Gradient-based methods: By analyzing the gradients of the model with respect to the input features, we can understand how small perturbations in input data translate to changes in the model’s outputs.
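Sensitivity analysis and gradient-based methods, as described in the list above, both ask how the output responds to small input changes. The sketch below approximates the gradient of a hypothetical `model` function with central finite differences. Deep learning frameworks would normally obtain gradients through automatic differentiation instead, so treat this as an illustration of the idea rather than how it is done in practice.

```python
def model(x):
    """Hypothetical differentiable model with three input features."""
    return 3.0 * x[0] ** 2 + 2.0 * x[1] - 0.5 * x[0] * x[2]


def finite_difference_gradient(predict, x, eps=1e-5):
    """Approximate d(predict)/d(x[i]) by nudging each feature up and down."""
    gradient = []
    for i in range(len(x)):
        up = list(x)
        down = list(x)
        up[i] += eps
        down[i] -= eps
        gradient.append((predict(up) - predict(down)) / (2 * eps))
    return gradient


point = [1.0, 2.0, 4.0]
gradient = finite_difference_gradient(model, point)

# Rank features by how strongly a small change moves the output at this point.
ranking = sorted(range(len(point)), key=lambda i: -abs(gradient[i]))
for i in ranking:
    print(f"feature {i}: sensitivity {gradient[i]:+.4f}")
```

The sign of each value shows the direction of the effect and the magnitude shows its strength, but only in the neighborhood of `point`. A different input can produce a very different ranking for a nonlinear model.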

These strategies enable us to extract meaningful insights from complex ML models, understand their inner workings, and ensure they align with our expectations.

Observability: Shedding Light on Model Behavior

Observability is the ability to monitor and understand the behavior of machine learning models in real-world scenarios. It involves verifying that the models respond as intended and detecting any performance degradation or malfunctions over time.

The Need for Monitoring and Error Detection

As machine learning models are deployed in various applications, from intelligent personal assistants to critical healthcare systems, it’s vital to monitor their performance continuously. Unexpected behavior or degradation in model performance can lead to severe consequences, such as providing incorrect medical recommendations or biased outputs.

To ensure observability, monitoring frameworks and metrics can be implemented. These frameworks enable data scientists and stakeholders to keep track of metrics such as accuracy, precision, or recall. Additionally, they help detect anomalies and enable prompt action when models start behaving unexpectedly.
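As a minimal sketch of such monitoring, the code below computes accuracy, precision, and recall for binary predictions and raises an alert flag when accuracy over a rolling window drops below a threshold. The `RollingMonitor` class, its window size, and its threshold are hypothetical choices made for illustration. They assume that true labels eventually become available for the predictions being monitored.

```python
from collections import deque


def classification_metrics(y_true, y_pred):
    """Accuracy, precision, and recall for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    return {
        "accuracy": (tp + tn) / len(pairs),
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }


class RollingMonitor:
    """Tracks the most recent labeled predictions and flags low accuracy."""

    def __init__(self, window_size=100, min_accuracy=0.8):
        self.window = deque(maxlen=window_size)  # old entries drop off automatically
        self.min_accuracy = min_accuracy

    def record(self, label, prediction):
        self.window.append((label, prediction))

    def check(self):
        if not self.window:
            return None  # nothing observed yet
        labels, predictions = zip(*self.window)
        metrics = classification_metrics(labels, predictions)
        metrics["alert"] = metrics["accuracy"] < self.min_accuracy
        return metrics


monitor = RollingMonitor(window_size=4, min_accuracy=0.7)
for label, prediction in [(1, 1), (0, 0), (1, 1), (0, 0)]:
    monitor.record(label, prediction)
print(monitor.check())

# Simulate degradation: the newest predictions are all wrong.
for label, prediction in [(1, 0), (1, 0), (0, 1), (0, 1)]:
    monitor.record(label, prediction)
print(monitor.check())
```

A fixed threshold is the simplest possible alerting rule. In practice the threshold, the window length, and the choice of metric would depend on the application and on how quickly labels arrive.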

Debugging and Iteratively Improving Models

Observability facilitates the debugging and iterative improvement process of machine learning models. By analyzing log files, error patterns, and A/B testing results, data scientists can identify sources of errors or limitations in their models. This information helps them steer their efforts towards model refinement and optimization.
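One simple form of error-pattern analysis is to group logged predictions by a segment and compare error rates. The sketch below assumes a hypothetical log format in which each record carries a `prediction`, a `label`, and a `device` field. Real logs will have different fields, and the records here are made up for illustration.

```python
from collections import defaultdict


def error_rate_by_segment(records, segment_key):
    """Share of wrong predictions within each value of segment_key."""
    totals = defaultdict(int)
    errors = defaultdict(int)
    for record in records:
        segment = record[segment_key]
        totals[segment] += 1
        if record["prediction"] != record["label"]:
            errors[segment] += 1
    return {segment: errors[segment] / totals[segment] for segment in totals}


# Hypothetical prediction log entries.
prediction_log = [
    {"device": "mobile", "prediction": 1, "label": 1},
    {"device": "mobile", "prediction": 0, "label": 1},
    {"device": "mobile", "prediction": 0, "label": 1},
    {"device": "desktop", "prediction": 1, "label": 1},
    {"device": "desktop", "prediction": 0, "label": 0},
    {"device": "desktop", "prediction": 1, "label": 0},
    {"device": "desktop", "prediction": 0, "label": 0},
]

rates = error_rate_by_segment(prediction_log, "device")
for segment, rate in sorted(rates.items(), key=lambda item: -item[1]):
    print(f"{segment}: {rate:.0%} of predictions wrong")
```

A segment with a noticeably higher error rate is a natural place to start debugging, for example by checking whether that segment was underrepresented in the training data.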

Moreover, observability of deployed models strengthens the feedback loop between data scientists and domain experts. Effective feedback lets models be adapted over time and keeps them aligned with the ever-changing requirements and constraints of real-world scenarios.

Conclusion

Machine learning is a powerful tool that reshapes industries and enhances decision-making processes. Explaining, interpreting, and observing machine learning models is crucial to ensuring trust, increasing transparency, and addressing biases. By leveraging the concepts of explainability, interpretability, and observability, we can fully unleash the potential of machine learning, empower decision-makers, and create responsible AI-powered solutions.

Frequently Asked Questions

Q: How does explainability help address bias in machine learning models?

A: Explainability allows us to identify biases in model predictions by understanding why certain decisions were made. With this knowledge, we can introduce corrective measures that make the models fairer.

Q: Can interpretability techniques be applied to any machine learning model?

A: While some interpretability techniques are model-agnostic, others are more suited to specific model architectures. Nevertheless, there are methods available for interpreting a wide range of machine learning models with varying complexity.

Q: How frequently should machine learning models be monitored?

A: Machine learning models should be continuously monitored to detect any performance degradation or anomalies. Regular monitoring helps confirm that models are performing as intended and allows for timely adjustments and improvements.

Q: Can observability help prevent the deployment of faulty machine learning models?

A: Yes, to a degree. Observability provides insight into how machine learning models perform in real-world scenarios, so potential issues can be identified during testing or a limited release, before a model is deployed widely. This reduces the chances of deploying faulty or subpar models.

Q: Why is observability particularly important for critical applications?

A: In critical applications, such as healthcare or autonomous driving, the consequences of model errors can be severe. Observability ensures that potential issues are promptly detected and addressed, reducing the risk of harm caused by the models.